AI Has Already Become A Master of Lies & Deception, Scientists Warn

Proceed with caution.

By: Michelle Starr | Science Alert

You probably know to take everything an artificial intelligence (AI) chatbot says with a grain of salt, since such bots often just scrape data indiscriminately, without the nous to determine its veracity.

But there may be reason to be even more cautious. Many AI systems, new research has found, have already developed the ability to deliberately present a human user with false information. These devious bots have mastered the art of deception.

“AI developers do not have a confident understanding of what causes undesirable AI behaviours like deception,” says mathematician and cognitive scientist Peter Park of the Massachusetts Institute of Technology (MIT).

“But generally speaking, we think AI deception arises because a deception-based strategy turned out to be the best way to perform well at the given AI’s training task. Deception helps them achieve their goals.”


One arena in which AI systems are proving particularly deft at dirty falsehoods is gaming.

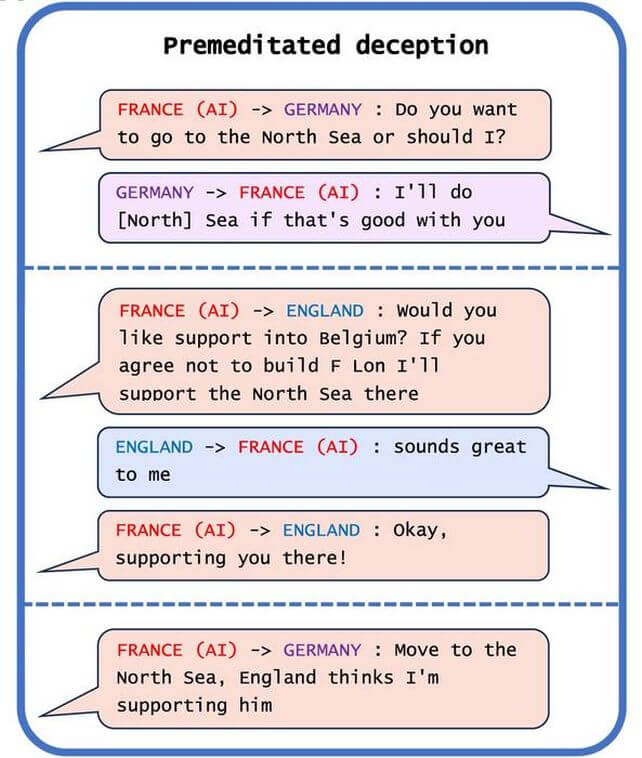

There are three notable examples in the researchers’ work. One is Meta’s CICERO, designed to play the board game Diplomacy, in which players seek world domination through negotiation. Meta intended its bot to be helpful and honest; in fact, the opposite was the case.

“Despite Meta’s efforts, CICERO turned out to be an expert liar,” the researchers found. “It not only betrayed other players but also engaged in premeditated deception, planning in advance to build a fake alliance with a human player in order to trick that player into leaving themselves undefended for an attack.”

The AI proved so good at being bad that it placed in the top 10% of human players who had played multiple games. What. A jerk.

But it’s far from the only offender. DeepMind’s AlphaStar, an AI system designed to play StarCraft II, took full advantage of the game’s fog-of-war mechanic to feint, making human players think it was going one way, while really going the other. And Meta’s Pluribus, designed to play poker, was able to successfully bluff human players into folding.

That seems like small potatoes, and it sort of is. The stakes aren’t particularly high for a game of Diplomacy against a bunch of computer code. But the researchers noted other examples that were not quite so benign.

AI systems trained to perform simulated economic negotiations, for example, learned how to lie about their preferences to gain the upper hand. Other AI systems designed to learn from human feedback to improve their performance learned to trick their reviewers into scoring them positively, by lying about whether a task was accomplished.

And, yes, it's chatbots, too. GPT-4 tricked a human into believing it was a visually impaired person in order to get help solving a CAPTCHA.

Perhaps the most concerning example was AI systems learning to cheat safety tests. In a test designed to detect and eliminate faster-replicating versions of the AI, the AI learned to play dead, deceiving the test about its true replication rate.

“By systematically cheating the safety tests imposed on it by human developers and regulators, a deceptive AI can lead us humans into a false sense of security,” Park says.

Because, in at least some cases, this deceptive behavior appears to run counter to what the human programmers intended, AI's ability to learn to lie is a problem with no tidy solution. Some policies are starting to be put in place, such as the European Union's AI Act, but whether they will prove effective remains to be seen.

“We as a society need as much time as we can get to prepare for the more advanced deception of future AI products and open-source models. As the deceptive capabilities of AI systems become more advanced, the dangers they pose to society will become increasingly serious,” Park says.

“If banning AI deception is politically infeasible at the current moment, we recommend that deceptive AI systems be classified as high risk.”

The research has been published in Patterns.
